Over the last decade, the automotive industry has witnessed rapid growth in Advanced Driver Assistance Systems (ADAS) and Autonomous Driving (AD) solutions, driven primarily by the progress in AI. In fact, the autonomous vehicle is often cited as an example of AI in action.

This article discusses the AI techniques being applied across the various areas of Autonomous Driving (AD) development.

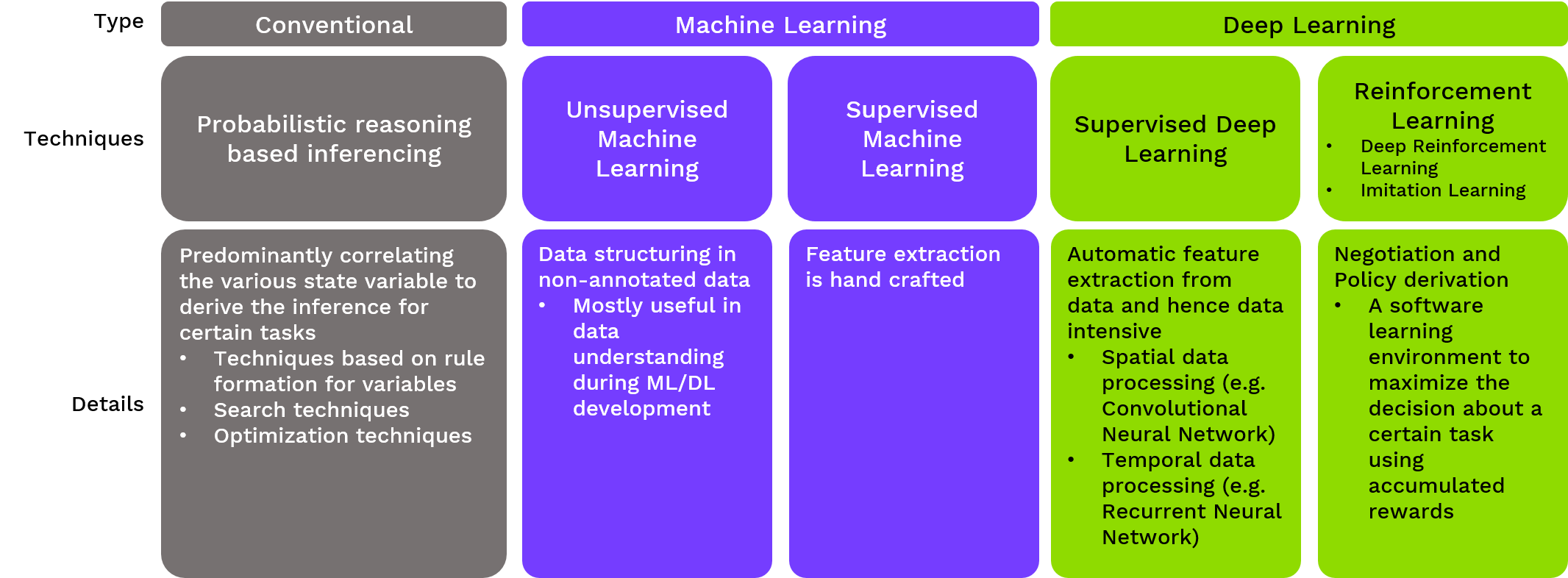

Artificial Intelligence comprises a range of techniques, and a solution typically draws on one of them or a combination of several. The techniques shown in Illustration 1, alone or combined, contribute to the components and features required for Autonomous Driving.

Illustration 1 – AI Techniques

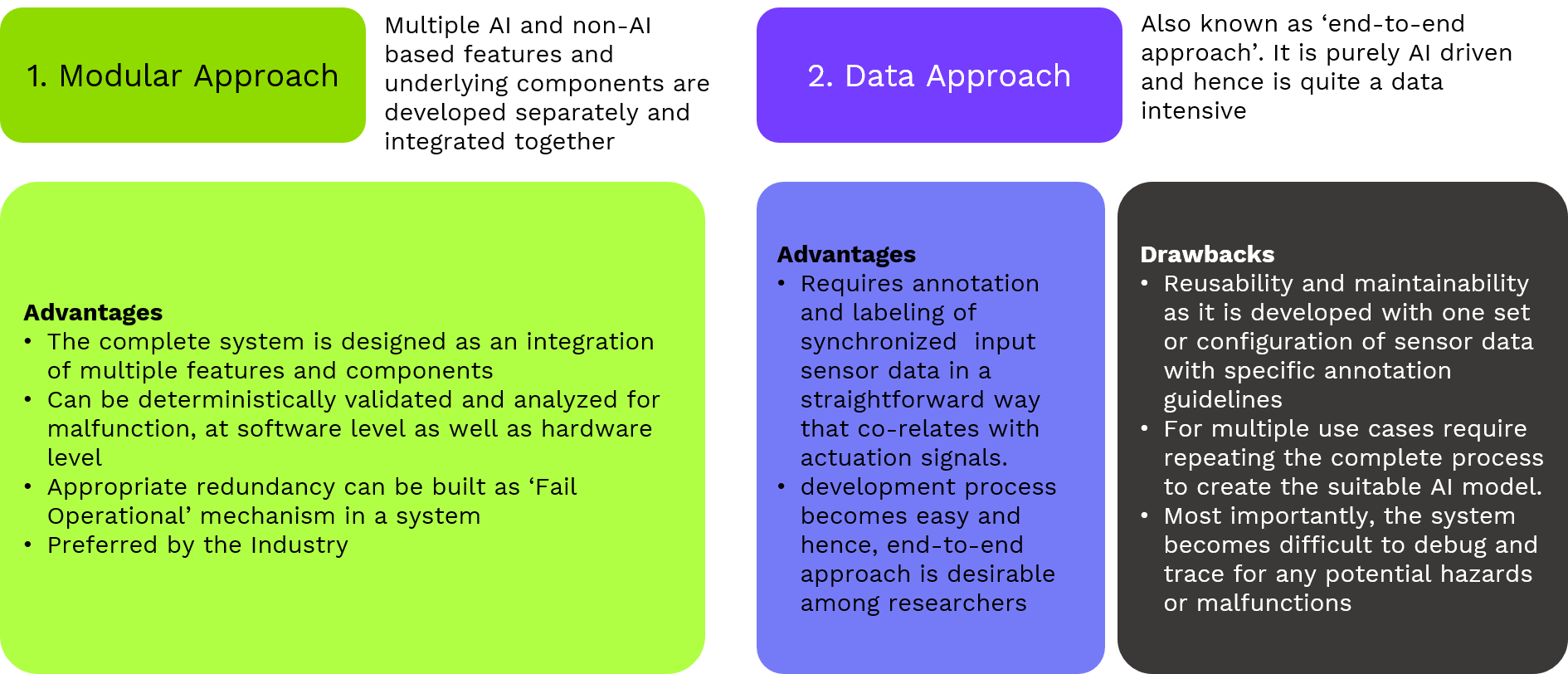

Illustration 2 – Modular Approach VS Data-Driven Approach

There are two schools of thought on how to build an AD system. The first, the Modular Approach, is where multiple AI-based and non-AI features and their underlying components are developed separately and then integrated. For example, an Object Detection (AI) module is augmented by other sensor data processing modules that are non-AI, such as Object Tracking and Fusion. The other school of thought professes an ‘end-to-end approach’, which is purely AI-driven and therefore quite data-intensive, and so is called ‘Data-Driven’.

Since the end-to-end, or Data-Driven, approach is AI-driven, it requires annotation and labeling of synchronized input sensor data (Camera, Radar, LiDAR, GPS, IMU) in a straightforward way that correlates with actuation signals such as steering and braking. This combination provides the instructions, or actuation, the vehicle needs to maneuver. Because the development process becomes simpler with this method, the end-to-end approach is popular among researchers. However, it suffers from poor reusability and maintainability, as it is developed with one set or configuration of sensor data under specific annotation guidelines. Adapting it to multiple use cases requires repeating the complete process to create a suitable AI model. Most importantly, the system becomes difficult to debug and to trace for potential hazards or malfunctions. It loses determinism because of the complexity of the multitude of scenarios it must cater to.

Thus, the industry prefers a ‘Modular Approach’, where the complete system is designed as an integration of multiple features and components. This way, each feature and its underlying components can be deterministically validated and analyzed for malfunction, at the software level as well as the hardware level. Accordingly, appropriate redundancy can be built in as a ‘Fail Operational’ mechanism, which takes over in case of a failure and ensures that the vehicle leaves mainstream traffic and parks safely. This is called a Minimum Risk Maneuver. It is of paramount importance because the Autonomous Driving system is a safety-critical system, covered by the Functional Safety standard ISO 26262.

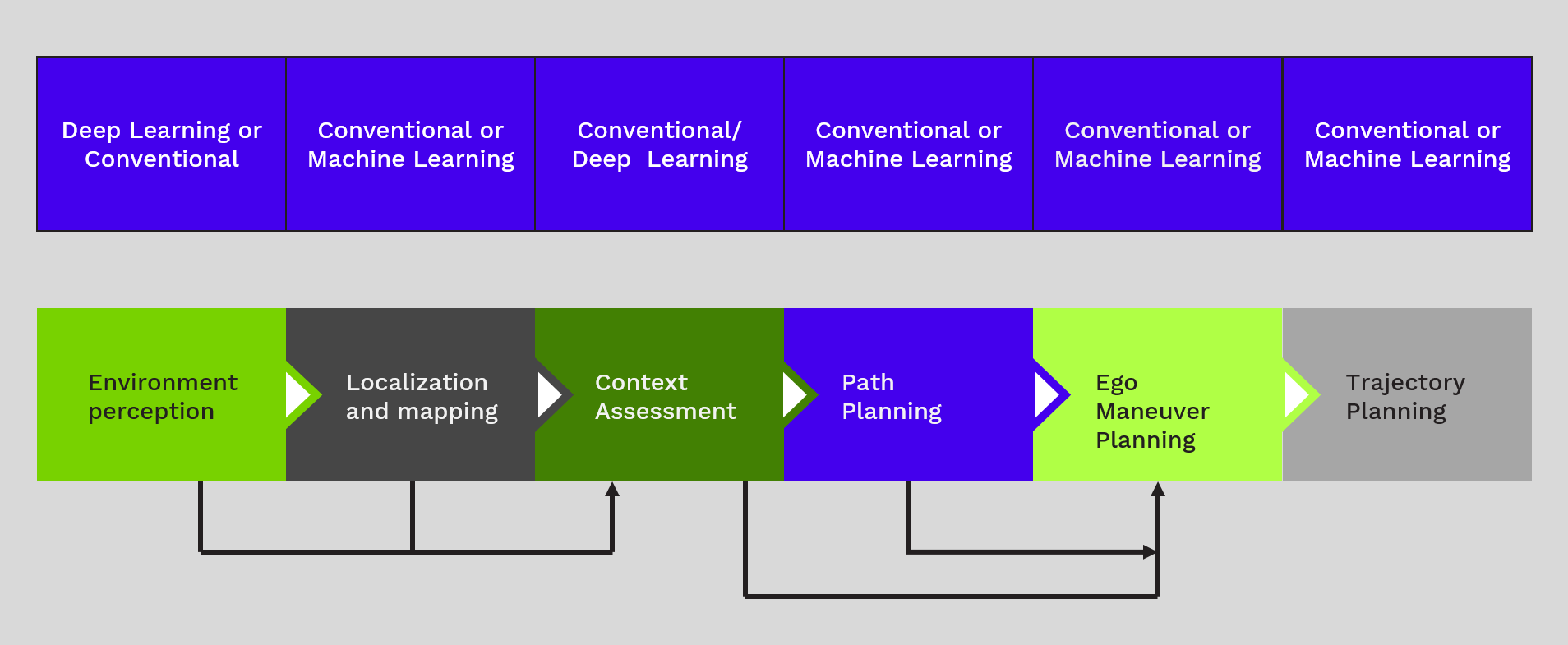

Let us now take a detailed look at the basic building blocks, or modules, of the Autonomous Driving system architecture and at how a combination of AI and conventional methods is used to realize them.

The Environment (Scene) perception module is responsible for sensing and measuring the surrounding environment in 3D, i.e., in real-world coordinates. Deep Learning is well suited to this task. Various real-time Deep Learning (DL) and Machine Learning (ML) architectures for 2D and 3D object detection and recognition are now deployed to understand the rigid objects in a scene. Another DL approach, Semantic Segmentation, is useful for understanding a scene in terms of traffic occupants, traffic-governing objects, and other contextual but non-rigid obstacles. The fusion of such multi-modal sensory perception is carried out with conventional methods based on probabilistic reasoning. The confidence of each spatial detection and the temporal continuity of detections are crucial measurements for this fusion. Though the perception approaches remain similar across applications, the choice of sensing technology (Camera, LiDAR, RADAR, Ultrasonic sensor) and its configuration topology make the application different for every Vehicle Manufacturer (OEM) and its suppliers (Tier 1s). The adopted AI approaches must also be pruned and customized for the selected hardware.

Image 1: Semantic Segmentation helps understand the surroundings
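To make the idea of confidence-based probabilistic fusion concrete, here is a minimal sketch, not a production fusion stack. It assumes that each sensor reports an independent detection probability for the same object and combines them in log-odds space (a naive Bayes assumption); the function names, the 0.5 prior, and the sample confidences are all hypothetical. Applying the same update frame after frame gives a simple notion of temporal continuity.

```python
import math


def _logit(p: float) -> float:
    """Convert a probability to log-odds, clipping to avoid infinities."""
    eps = 1e-6
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def _sigmoid(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def fuse_confidences(confidences, prior: float = 0.5) -> float:
    """Fuse per-sensor detection probabilities, assuming independent sensors.

    Each sensor contributes the evidence it adds on top of the shared prior.
    """
    prior_logit = _logit(prior)
    total = prior_logit + sum(_logit(c) - prior_logit for c in confidences)
    return _sigmoid(total)


if __name__ == "__main__":
    # Hypothetical camera and radar confidences for one object in one frame.
    print(round(fuse_confidences([0.7, 0.8]), 4))

    # Temporal continuity: a weak detection repeated over frames builds belief.
    belief = 0.5
    for frame_confidence in [0.6, 0.6, 0.6]:
        belief = fuse_confidences([belief, frame_confidence])
    print(round(belief, 4))
```

Two agreeing sensors yield a fused confidence higher than either alone, while a disagreeing sensor pulls the result down. Real systems would also have to handle correlated sensors and data association, which this sketch ignores.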

A vehicle equipped with Autonomous Driving features, also called the Ego Vehicle, determines its position in the 3D environment from the perception modules and from the mapping information supplied by the Localization and Mapping module. Map information is either extracted by processing sensor data or taken from an HD Map. Although deep learning-based localization has made some progress, practical solutions still lean on conventional Structure from Motion (SfM) techniques because of their computational efficiency on embedded platforms. These methods are closely coupled with the odometry signals of the ego vehicle, captured using inertial measurement units.

Image 2: The Ego Vehicle uses High Definition (HD) Maps to understand its position
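The odometry side of localization can be illustrated with a small dead-reckoning sketch. This is not SfM and not a full localization pipeline: it only integrates speed and yaw rate into a 2D pose, assuming a planar world, noise-free signals, and a fixed time step. The `Pose` type and the function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres, world frame
    y: float        # metres, world frame
    heading: float  # radians, 0 points along +x


def dead_reckon(pose: Pose, speed: float, yaw_rate: float, dt: float) -> Pose:
    """Advance the pose by one time step using forward Euler integration."""
    return Pose(
        x=pose.x + speed * math.cos(pose.heading) * dt,
        y=pose.y + speed * math.sin(pose.heading) * dt,
        heading=pose.heading + yaw_rate * dt,
    )


def integrate(pose: Pose, samples, dt: float) -> Pose:
    """Apply a sequence of (speed, yaw_rate) odometry samples to a pose."""
    for speed, yaw_rate in samples:
        pose = dead_reckon(pose, speed, yaw_rate, dt)
    return pose


if __name__ == "__main__":
    # Ten samples at 10 m/s with a gentle left turn, 0.1 s apart.
    samples = [(10.0, 0.1)] * 10
    print(integrate(Pose(0.0, 0.0, 0.0), samples, dt=0.1))
```

Because integration errors accumulate over time, such an estimate is normally corrected against map or perception data, which is where the coupling described above comes in.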

The prediction module performs heuristic analysis and modeling of the time-sequence data generated by perception and localization, and so it originated as a rule-based module. Conventionally, it used AI techniques for causal analysis of random processes, namely Markov Chain modeling and its variants, which require heavy computation and a large amount of memory to store the context of every detected traffic occupant. Even formulating a directed acyclic graph that relates the identified input variables is highly problem-specific and time-consuming. Complexity grows further because a large number of direct and derived variables must be considered when designing a solution. Hence, various machine learning and deep learning techniques for time-sequence modeling, such as sequence-to-sequence models and Recurrent Neural Networks (including Long Short-Term Memory networks), are being adopted to learn a deterministic and generic relation between input and output. Their advantage over the conventional approach is that the contextual relation between traffic occupants and the ego vehicle is easier to establish from the temporal data sequences used to train the AI model.

Image 3: The Neural Network provides an output to help understand where the Ego Vehicle will be and where the preceding vehicle will be
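As an illustration of the conventional Markov Chain style of modeling mentioned above, the following minimal sketch propagates a belief over a traffic occupant's next maneuver. The maneuver states and every transition probability are invented for the example; a real system would estimate them from data and would track such a belief for every detected occupant, which is where the memory cost comes from.

```python
# Hypothetical maneuver states for one traffic occupant.
STATES = ("keep_lane", "change_left", "change_right")

# Hypothetical transition probabilities; each row sums to 1.
TRANSITIONS = {
    "keep_lane":    {"keep_lane": 0.90, "change_left": 0.05, "change_right": 0.05},
    "change_left":  {"keep_lane": 0.60, "change_left": 0.40, "change_right": 0.00},
    "change_right": {"keep_lane": 0.60, "change_left": 0.00, "change_right": 0.40},
}


def step(belief: dict) -> dict:
    """Propagate the belief over maneuvers by one time step."""
    nxt = {state: 0.0 for state in STATES}
    for state, prob in belief.items():
        for target, trans_prob in TRANSITIONS[state].items():
            nxt[target] += prob * trans_prob
    return nxt


def predict(belief: dict, steps: int) -> dict:
    """Propagate the belief several steps into the future."""
    for _ in range(steps):
        belief = step(belief)
    return belief


if __name__ == "__main__":
    start = {"keep_lane": 1.0, "change_left": 0.0, "change_right": 0.0}
    print(predict(start, steps=2))
```

A learned sequence model replaces the hand-built transition table with parameters fitted to recorded sequences, which is the shift the paragraph above describes.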

The last, but very important, module covers path planning and arbitration of the ego vehicle’s behavior. Planning a route from source to destination is non-trivial: the static and dynamic surroundings and their context must be analyzed to identify a collision-free path among multiple options. It is thus an analysis of an unstructured multi-agent environment, in which the ego vehicle negotiates with other traffic occupants to generate a contextual driving policy for going straight, turning left or right, merging into traffic, overtaking, giving way, and so on. Deep reinforcement learning and imitation learning are being experimented with, using simulated environments and real recorded driving data respectively, but each has its own pros and cons. This leaves room for conventional AI methods, which suit various autonomy types and scenarios. Trajectory planning, which takes the path planning result as input, is typically dominated by traditional model-based control techniques that generate control actions by solving an optimization problem with a fixed number of variables. However, growing complexity, in the form of unforeseen situations and a larger number of meta-parameters, creates the need to combine the a priori model (a traditional model) with a newer AI model based on time-series analysis (a non-linear dynamic model), which helps attain more stability with reduced design effort.

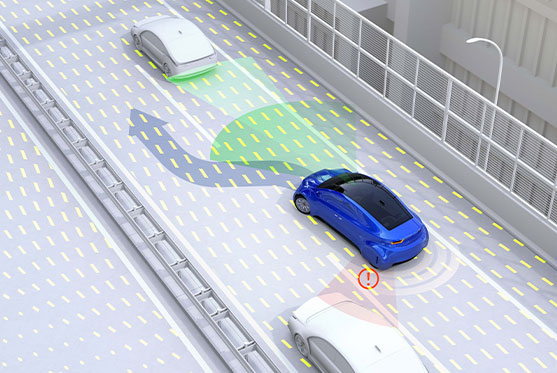

Image 4: A collision free Path is identified from source to destination
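One classical way to find a collision-free path among multiple options is graph search. The sketch below uses A* on a small occupancy grid purely as an illustration; the article does not name a specific planner, and the grid, the 4-connected motion model, and the unit step cost are assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Return a shortest list of free cells from start to goal, or None.

    grid[r][c] == 0 means free, 1 means occupied. Movement is 4-connected
    with unit cost, so the Manhattan distance is an admissible heuristic.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(
                        open_heap, (new_cost + heuristic(nxt), new_cost, nxt)
                    )
    return None


if __name__ == "__main__":
    # Hypothetical occupancy grid with a single gap in the middle row.
    grid = [
        [0, 0, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0)))
```

A static grid search like this covers only the geometric part of the problem. Negotiating with other traffic occupants and producing a drivable trajectory are handled by the behavior and trajectory planning layers described above.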

Figure 1 – Use of AI in each module of AD development


Thus, various AI techniques are used to build the modules and components of the AD system. The next step is ensuring that all of it works in the vehicle. Making the AD solution work reliably in the vehicle brings its own set of challenges and is another area where AI is used extensively.

Powerful GPU servers are available for most offline AI activities such as data generation and training. Deploying an AI solution, specifically a machine learning or deep learning one, is however not a trivial task. Throughput (accuracy with minimal computation), power consumption, and price become bottlenecks when the solution must run on edge devices in a vehicle. Efforts to optimize both computation and memory footprint (the number of learned model parameters) are therefore important in autonomous driving. Accordingly, there is growing work on designing smaller, more efficient architectures and on optimizing current state-of-the-art architectures for real-time operation using model compression techniques such as channel pruning, weight pruning, sparsification, and quantization. Many semiconductor vendors (Intel, Nvidia, TI, Renesas) offer comprehensive toolchains specific to their hardware architectures (CPUs coupled with Graphics Processing Units or Neural Processing Units) that facilitate automatic optimization of AI components and reduce time to market. Some leading organizations in AD have proposed using FPGAs and ASICs for AI solutions, predominantly for perception, citing their benefits in flexibility, power consumption, and functional safety.
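Two of the compression ideas named above, weight pruning and quantization, can be sketched on a plain list of weights. This is a toy illustration under simple assumptions (magnitude-based pruning, symmetric per-tensor int8 quantization); it does not reproduce any vendor toolchain, and the function names and sample weights are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # hypothetical layer weights
    sparse = prune_by_magnitude(weights, sparsity=0.5)
    quantized, scale = quantize_int8(sparse)
    print(sparse)
    print(quantized)
    print(dequantize(quantized, scale))
```

Pruning reduces the number of non-zero parameters, and quantization shrinks each remaining parameter from a 32-bit float to 8 bits. In practice both steps are followed by accuracy checks, and often by fine-tuning, because either can degrade the model.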

In summary, AI plays a key role in autonomous driving and is being adapted to domain-specific challenges such as on-the-edge computation that balances a small memory footprint against accuracy, Functional Safety, and Validation and Verification, while at the same time providing plausible solutions across the areas of AD development discussed above.

We at KPIT are implementing all these AI techniques in multiple client programs. A combination of deep domain knowledge, expertise in AI, and exposure to large client ADAS/AD programs ranging from L2 to L4 and above makes KPIT a partner of choice for Autonomous Driving development and related system integration.

Subject Matter Expert, Artificial Intelligence and Computer vision

KPIT Technologies


